From @xuOptimizingStatisticalMachine2016

The methodology poses text simplification as a paraphrasing problem: given a text, rewrite it under the constraint that the output should be simpler than the input, while preserving as much of the input's meaning as possible and maintaining the well-formedness of the text.

SARI

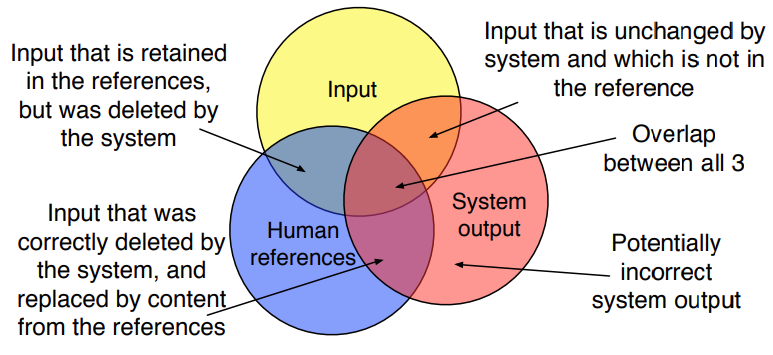

SARI compares system output against references and against the input sentence. It explicitly measures the goodness of words that are added, deleted and kept by the system.

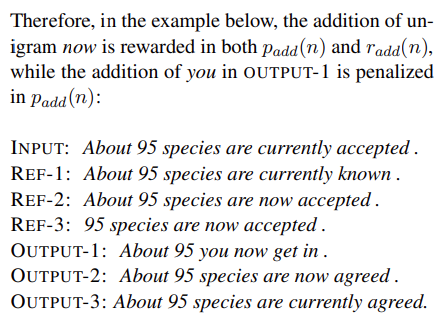

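Addition operations are rewarded when an n-gram in the system output $O$ was not in the input $I$ but occurs in any of the references $R$, i.e. $O \cap \bar{I} \cap R$.

Using $\#_g(\cdot)$ as a binary indicator of the occurrence of n-gram $g$ in a given text:

$$
\#_g(O \cap \bar{I}) = \max(\#_g(O) - \#_g(I),\ 0)
$$

$$
\#_g(R \cap \bar{I}) = \max(\#_g(R) - \#_g(I),\ 0)
$$

Then we can define n-gram precision and recall for addition operations:

$$
p_{add}(n) = \frac{\sum_{g \in O} \min\left(\#_g(O \cap \bar{I}),\ \#_g(R)\right)}{\sum_{g \in O} \#_g(O \cap \bar{I})}
$$

$$
r_{add}(n) = \frac{\sum_{g \in O} \min\left(\#_g(O \cap \bar{I}),\ \#_g(R)\right)}{\sum_{g \in O} \#_g(R \cap \bar{I})}
$$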
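As a rough illustration of the addition terms, the sketch below computes them with plain Python sets for a single n-gram order. The function names `ngrams` and `add_precision_recall` are hypothetical, tokenization is assumed to be whitespace splitting, and the recall denominator is taken over reference n-grams absent from the input, which is how the sum is read here rather than something the notes spell out.

```python
def ngrams(tokens, n):
    """Return the set of n-grams (as tuples) that occur in a token list."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def add_precision_recall(input_toks, output_toks, refs_toks, n=1):
    """Addition precision and recall for order n (binary occurrence)."""
    in_grams = ngrams(input_toks, n)
    out_grams = ngrams(output_toks, n)
    # An n-gram counts as "in the references" if any single reference has it.
    ref_grams = set().union(*(ngrams(ref, n) for ref in refs_toks))

    added_by_system = out_grams - in_grams   # in O, not in I
    added_by_refs = ref_grams - in_grams     # in R, not in I
    rewarded = added_by_system & ref_grams   # in O, not in I, in R

    # Assumption of this sketch: an empty denominator yields 0.0.
    precision = len(rewarded) / len(added_by_system) if added_by_system else 0.0
    recall = len(rewarded) / len(added_by_refs) if added_by_refs else 0.0
    return precision, recall


source = "the cat perched on the mat".split()
output = "the cat sat on the mat".split()
references = ["the cat sat on the mat".split(),
              "the cat rested on the rug".split()]

p_add, r_add = add_precision_recall(source, output, references)
print(p_add, r_add)  # "sat" is the only addition: precision 1.0, recall 1/3
```

The references jointly add three unigrams ("sat", "rested", "rug") and the system adds only one of them, which is why recall is lower than precision in this toy case.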

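Also, words that are retained in both the output $O$ and the references $R$ should be rewarded. $R'$ is used to mark the n-gram counts over $R$ with fractions, e.g. if a unigram occurs in 2 out of the total $r$ references, then its count is weighted by $2/r$ in the computation of the precision and recall:

$$
p_{keep}(n) = \frac{\sum_{g \in I} \min\left(\#_g(I \cap O),\ \#_g(I \cap R')\right)}{\sum_{g \in I} \#_g(I \cap O)}
$$

$$
r_{keep}(n) = \frac{\sum_{g \in I} \min\left(\#_g(I \cap O),\ \#_g(I \cap R')\right)}{\sum_{g \in I} \#_g(I \cap R')}
$$

where:

$$
\#_g(I \cap O) = \min(\#_g(I),\ \#_g(O))
$$

$$
\#_g(I \cap R') = \min(\#_g(I),\ \#_g(R)/r)
$$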
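The fractional reference weighting is easier to see in code. The following is a minimal sketch for one n-gram order, assuming whitespace tokens and at least one reference; `keep_precision_recall` is a hypothetical name, and returning 0.0 for an empty denominator is a convention of this sketch.

```python
def ngrams(tokens, n):
    """Return the set of n-grams (as tuples) that occur in a token list."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def keep_precision_recall(input_toks, output_toks, refs_toks, n=1):
    """Keep precision and recall for order n with fractional reference counts."""
    in_grams = ngrams(input_toks, n)
    out_grams = ngrams(output_toks, n)
    ref_sets = [ngrams(ref, n) for ref in refs_toks]  # assumes >= 1 reference

    matched = system_total = reference_total = 0.0
    for g in in_grams:
        kept_by_system = 1.0 if g in out_grams else 0.0
        # Fraction of references that also keep this input n-gram.
        kept_by_refs = sum(g in ref for ref in ref_sets) / len(ref_sets)
        matched += min(kept_by_system, kept_by_refs)
        system_total += kept_by_system
        reference_total += kept_by_refs

    precision = matched / system_total if system_total else 0.0
    recall = matched / reference_total if reference_total else 0.0
    return precision, recall


source = "the cat perched on the mat".split()
output = "the cat sat on the mat".split()
references = ["the cat sat on the mat".split(),
              "the cat rested on the rug".split()]

p_keep, r_keep = keep_precision_recall(source, output, references)
print(p_keep, r_keep)  # 0.875 1.0
```

Here "mat" is kept by the system but by only one of the two references, so it contributes 0.5 rather than 1 to the numerator, which pulls precision below 1.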

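For deletion, only precision is used, because over-deleting n-grams of the input $I$ hurts readability much more than not deleting:

$$
p_{del}(n) = \frac{\sum_{g \in I} \min\left(\#_g(I \cap \bar{O}),\ \#_g(I \cap \bar{R'})\right)}{\sum_{g \in I} \#_g(I \cap \bar{O})}
$$

where:

$$
\#_g(I \cap \bar{O}) = \max(\#_g(I) - \#_g(O),\ 0)
$$

$$
\#_g(I \cap \bar{R'}) = \max(\#_g(I) - \#_g(R)/r,\ 0)
$$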
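Deletion precision can be sketched the same way: each input n-gram the system drops is credited by the fraction of references that also drop it. The name `del_precision` is hypothetical, tokens are assumed to be whitespace-split, at least one reference is assumed, and an empty denominator is mapped to 0.0 as a convention of this sketch.

```python
def ngrams(tokens, n):
    """Return the set of n-grams (as tuples) that occur in a token list."""
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def del_precision(input_toks, output_toks, refs_toks, n=1):
    """Deletion precision for order n with fractional reference counts."""
    in_grams = ngrams(input_toks, n)
    out_grams = ngrams(output_toks, n)
    ref_sets = [ngrams(ref, n) for ref in refs_toks]  # assumes >= 1 reference

    matched = system_total = 0.0
    for g in in_grams:
        deleted_by_system = 0.0 if g in out_grams else 1.0
        # Fraction of references that also drop this input n-gram.
        deleted_by_refs = 1.0 - sum(g in ref for ref in ref_sets) / len(ref_sets)
        matched += min(deleted_by_system, deleted_by_refs)
        system_total += deleted_by_system

    return matched / system_total if system_total else 0.0


source = "the cat perched on the mat".split()
output = "the cat sat".split()
references = ["the cat sat on the mat".split(),
              "the cat rested on the rug".split()]

print(del_precision(source, output, references))  # 0.5
```

The system deletes "perched", "on" and "mat". Both references drop "perched" (credit 1), neither drops "on" (credit 0), and one of two drops "mat" (credit 0.5), giving 1.5 / 3.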

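Then, for add and keep, the F-score is computed from the precisions and recalls averaged over n-gram orders; del contributes only its averaged precision:

$$
P_{operation} = \frac{1}{k} \sum_{n=1}^{k} p_{operation}(n), \qquad
R_{operation} = \frac{1}{k} \sum_{n=1}^{k} r_{operation}(n)
$$

$$
F_{operation} = \frac{2 \times P_{operation} \times R_{operation}}{P_{operation} + R_{operation}}, \qquad operation \in \{del, keep, add\}
$$

Finally:

$$
SARI = d_1 F_{add} + d_2 F_{keep} + d_3 P_{del}
$$

where $d_1 = d_2 = d_3 = 1/3$ and $k$ is the highest n-gram order, set to 4.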
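To make the final combination concrete, here is a small sketch that takes per-order precision and recall values (one entry per n-gram order, so four entries when the highest order is 4) and produces the score. The function names and the list-based interface are assumptions made for illustration; they do not correspond to any particular library.

```python
def mean(values):
    """Arithmetic mean over n-gram orders."""
    return sum(values) / len(values)


def f_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    total = precision + recall
    return 2 * precision * recall / total if total > 0 else 0.0


def sari_from_components(p_add, r_add, p_keep, r_keep, p_del,
                         weights=(1 / 3, 1 / 3, 1 / 3)):
    """Combine per-order values (lists indexed by n-gram order) into SARI."""
    f_add = f_score(mean(p_add), mean(r_add))
    f_keep = f_score(mean(p_keep), mean(r_keep))
    d1, d2, d3 = weights
    # Deletion enters through its averaged precision only.
    return d1 * f_add + d2 * f_keep + d3 * mean(p_del)


# Hypothetical per-order values for n = 1..4, for illustration only.
score = sari_from_components(
    p_add=[0.5, 0.5, 0.5, 0.5], r_add=[0.5, 0.5, 0.5, 0.5],
    p_keep=[0.5, 0.5, 0.5, 0.5], r_keep=[0.5, 0.5, 0.5, 0.5],
    p_del=[0.5, 0.5, 0.5, 0.5],
)
print(score)
```

Averaging over orders before taking the harmonic mean follows the order of operations in the definitions above; computing an F-score per order and then averaging would generally give a different number.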

Results

SARI correlates highly with human judgments of simplicity, while BLEU exhibits higher correlation on grammaticality and meaning preservation (unsurprisingly, since BLEU was not designed to evaluate simplification).

From @alva-manchegoDataDrivenSentenceSimplification2020

SARI is only useful as a proxy for assessing simplicity gain, and it is limited to lexical simplifications and short-distance reordering, even though many other text transformations are possible.