From @rafailovDirectPreferenceOptimization2023.

Direct Prefercen Optimization, or DPO, optimizes for human preferences while avoiding reinforcmente learning steps: existing methods, like Reinforcement Learning with Human Feedback first fit a reward model to a dataset of prompts and humen preferences over pair of responses, then use Reinforcement Learning to find a policy that maximizes the learned reward.

DPO directly optimizes for the policy best satisfying the preference with a simple classification objective, fitting an implicit reward model whose corresponding optimal policy can be extracted in closed form.

The key insight is to leverage an analytical mapping from reward functions to optimal policies, basically transforming a loss function over reward functions into a loss function over policies.

This enables the avoidance of fitting an explicit, standalone reward model, while still optimizing under existing models of human preferences, such as the Bradley-Terry model.

Formally

Starting from the same RL objective as RLHF:

is demonstrated that the optimal solution to the KL-constrained reward maximization takes the form:

Where is the partition function. Even using Maximum Likelihood Estimation to estimate of the ground truth reward function , it still expensive to estimate the partition function , which makes this representation hard to utilize in practice.

However, we can rearrange the equation to express the reward function in terms of its corresponding optimal policy , the reference policy , and the unknown partition function . Specifically, the logarithm of both sides is taken and then with some algebra we obtain:

We can apply the reparameterization to the ground-truth reward and corresponding optimal model . Fortunately, the Bradley-Terry depends only on the difference of rewards between two completition.

Substituting the reparameterization in the equation for , the partition function cancels and we can express the human preference probability in terms of only the optimal policy and reference policy . Thus, the optima RLHF policy under the Bradley-Terry model satisfies the preference model:

While this uses the Bradley-Terry model, similarly the expression is derivable under the more general Plackett-Luce model.

Now, having the probability of human preference data in terms of the optimal policy rather than the reward model, we can formulate a Maximum Likelihood object for a parametrized policy . Analogous to the reward modeling approach in RLHF, the policy objective becomes:

This way, by using an alternative parameterization, we fit an implicit reward whose optimal policy is simply . Moreover, since is equivalent to fitting a reparametrized Bradley-Terry model, it has certain theoretical properties, such as consistencies under suitable assumption of the preference data distribution.

Mechanistic Understanding

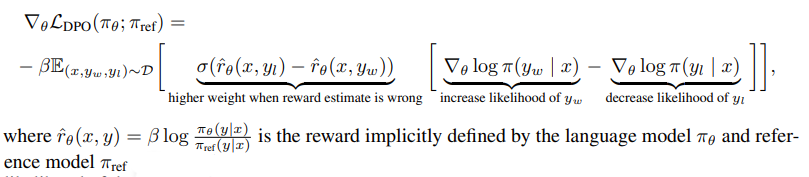

The gradient with respect to parameters can be written as:

Intuitively, the gradient of the loss function increases the likelihood of the preferred completions and deacreases the likelihood of dispreferred completitions . Importantly, the examples are weighted y how much higher the implicit reward model rates the dispreferred compeltions, scaled by i.e. how incorrectly the implicit reward models orders the completitions, accounting for the strength of the KL constraint.

DPO Pipeline

The general DPO pipeline is as follows:

- Sample competitions for every prompt , and label them with human preferences to construct the offline dataset of preferences ;

- Optimize the Language model to minimize for the given and and desired .

To reuse publicly available datasets, rather than generating samples and gathering human preferences, we initialize since the preference dataset are sampled using ( is the fine-tuned Language Model from which the preference labeled generations were extracted from for the construction of publicly available preference datasets).

However, when isn’t available, we initialized by maximizing likelihood of preferred completitions , that is, .

This procedure helps mitigate the distribution shift between the true reference distribution which is unavailable, and used by DPO.